DPAT demo application on RTDI with SmarTest 7

About this tutorial

In this tutorial you will learn how to:

- Create an RTDI virtual environment with two VMs:
  - SmarTest 7.x Host Controller VM
  - ACS Edge Server VM
- In the Host Controller VM, configure the pre-downloaded apps:
  - The Advantest demo app for ACS Edge, which performs statistical data processing (DPAT)
  - The SmarTest test program
- Run the SmarTest test program so that it uses the Advantest DPAT demo app on ACS Edge.

The Edge app can be based on two kinds of containers:

- Python Docker container
- Jupyter Notebook Docker container

Compatibility

SmarTest 7 / Nexus 3.1.0 / Edge 3.4.0-prod

Before you begin

You need to request access to the ACS Container Hub, and create your own user account and project.

Procedure

Note: The ‘adv-dpat’ project used below is for demonstration purposes only. Replace it with your own project.

1. Create an RTDI virtual environment from the dashboard

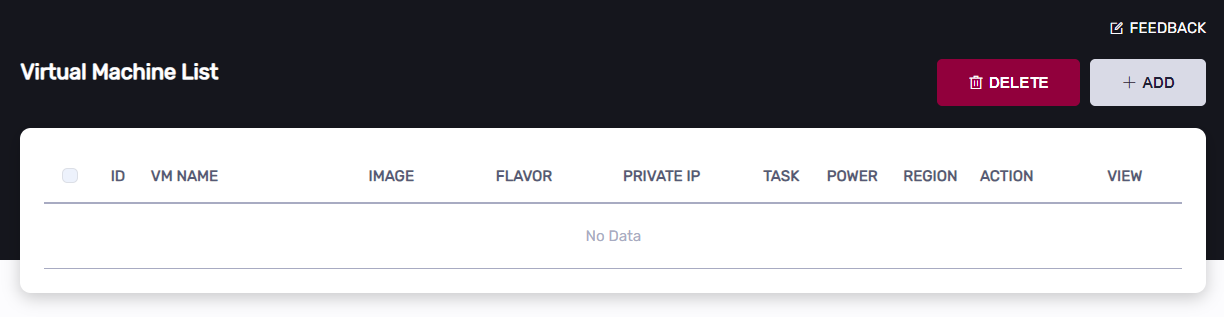

Click the “Add” button at the top right of the dashboard page.

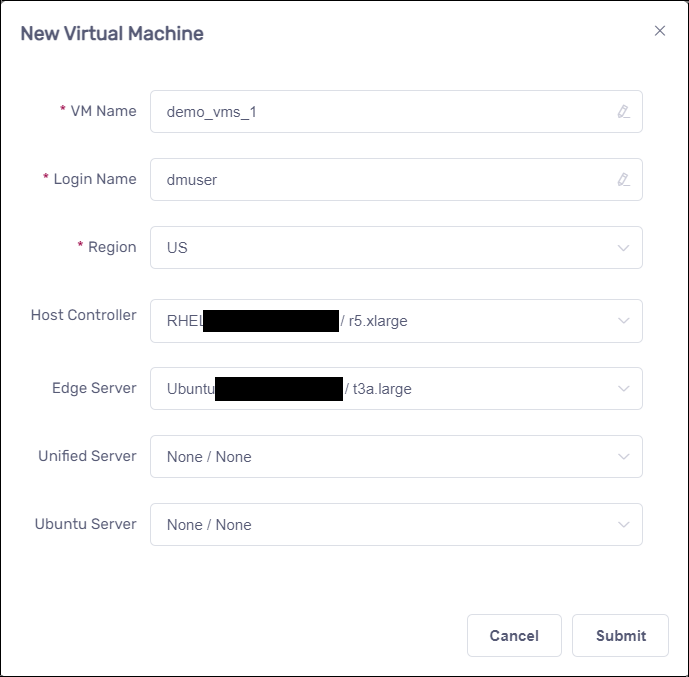

In the virtual machine dialog that pops up, enter the “VM Name” and “Login Name”, then select the “Host Controller” and “Edge Server” versions.

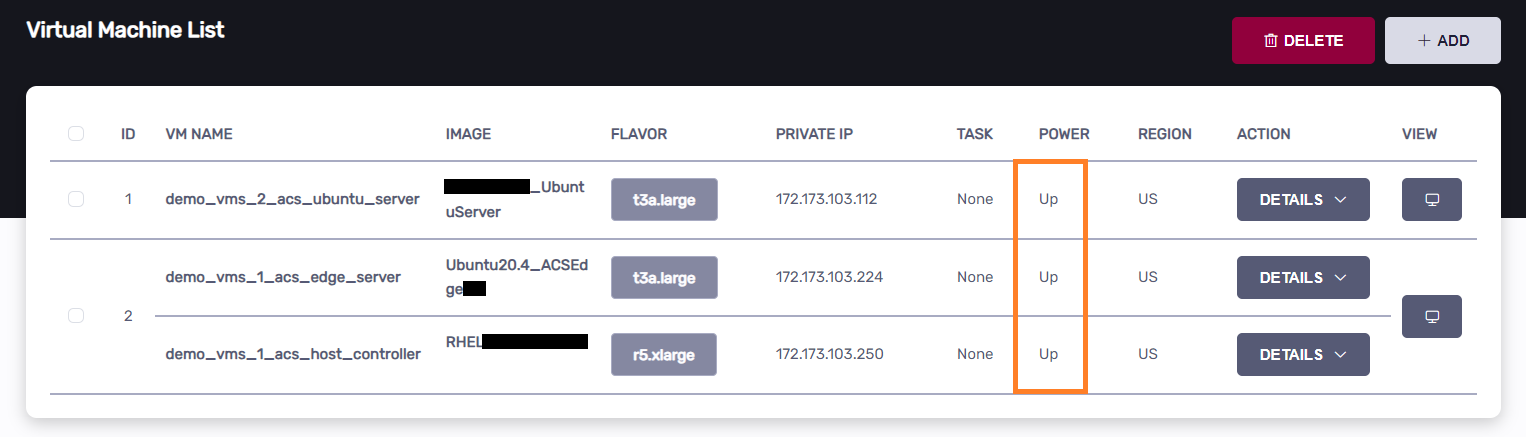

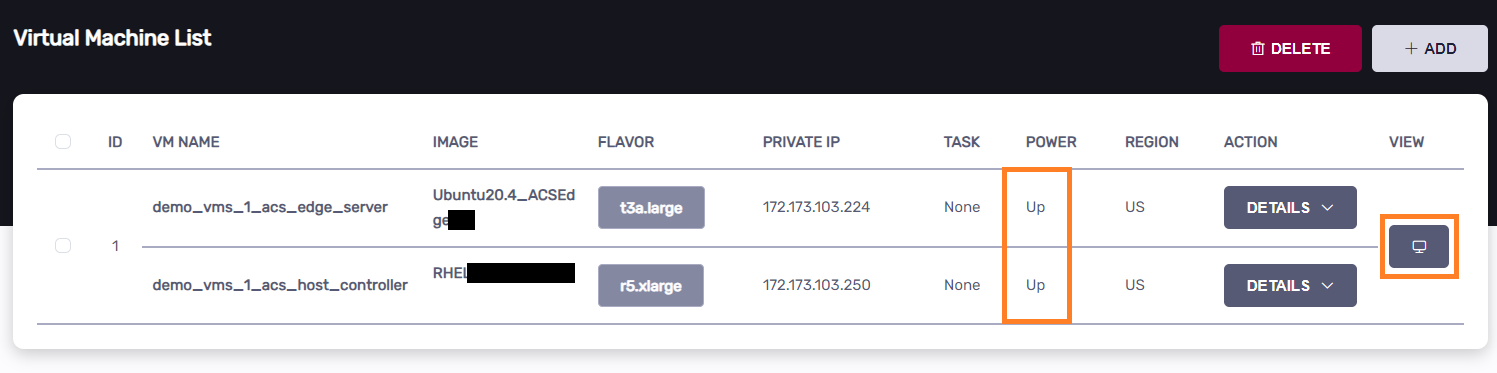

Click the “Submit” button and wait 3 to 5 minutes for the VMs to be created. When creation succeeds, the Power State shows “Up”.

Click the “VIEW” button on the right of the VM's table row to open the Host Controller VNC GUI.

2. Transfer the demo program

Click to download the application-dpat-v3.1.0-RHEL74.tar.gz archive (a simple DPAT algorithm in Python) to your computer.

Transfer the file to the ~/apps directory on the Host Controller VM. Refer to the “Transferring files” section of the VM Management page.

In the VNC GUI, extract the files in a bash console:

cd ~/apps/

tar zxf application-dpat-v3.1.0-RHEL74.tar.gz

3. Configure the demo program

Confirm that the demo test program directory “~/apps/application-dpat-v3.1.0/client/SMT7/demo/” exists. This test program runs together with the DPAT app.

The test flow file, which calls the DPAT app, is located at ~/apps/application-dpat-v3.1.0/client/SMT7/demo/testflow/testflow:


hp93000,testflow,0.1
language_revision = 1;
information
end
-----------------------------------------------------------------
declarations
@Device_Name = "Demo_Dpat";
@Lot_Id = "123456";
@TMLimit_TestMode_JF = "FT";
end
-----------------------------------------------------------------
implicit_declarations
end
-----------------------------------------------------------------
flags
datalog_formatter = 0;
datalog_sample_size = 1;
graphic_result_displa = 1;
state_display = 0;
print_wafermap = 0;
ink_wafer = 0;
max_reprobes = 1;
temp_monitor = 1;
calib_age_monitor = 1;
diag_monitor = 1;
current_monitor = 1;
log_events_enable = 1;
set_pass_level = 0;
set_fail_level = 0;
set_bypass_level = 0;
hold_on_fail = 0;
global_hold = 0;
debug_mode = 0;
debug_analog = 0;
parallel_mode = 1;
site_match_mode = 2;
global_overon = 1;
limits_enable = 0;
test_number_enable = 1;
test_number_inc = 1;
log_cycles_before = 0;
log_cycles_after = 0;
unburst_mode = 0;
sqst_mode = 0;
warn_as_fail = 1;
use_hw_dsp = 0;
dsp_file_enable = 0;
buffer_testflow_log = 0;
check_testmethod_api = 0;
tm_crash_as_fatal = 1;
hidden_datalog_mode = 0;
multibin_mode = 0;
user Insertion_Counter = 0;
user Testflow_Variable = 12345;
user var = 0;
end
-----------------------------------------------------------------
testmethodparameters
tm_2:
"Loops" = "1";
tm_4:
"Loops" = "1";
tm_5:
"Loops" = "10";
end
-----------------------------------------------------------------
testmethodlimits
end
-----------------------------------------------------------------
testmethods
tm_10:
testmethod_class = "DPAT_user_library.UpdateNexusVariables";
tm_2:
testmethod_class = "DPAT_user_library.PAT_simulator";
tm_4:
testmethod_class = "DPAT_user_library.PAT_simulator";
tm_5:
testmethod_class = "DPAT_user_library.PAT_simulator";
tm_8:
testmethod_class = "DPAT_user_library.DPAT_ACSEgde_New_Limits";
end
-----------------------------------------------------------------
test_suites
ACSEdge_DPAT_Limit_Change:
override = 1;
override_testf = tm_8;
comment = "This will create a docker container from an image";
local_flags = output_on_pass, output_on_fail, value_on_pass, value_on_fail, per_pin_on_pass, per_pin_on_fail;
site_match = 2;
site_control = "parallel:";
TestA:
override = 1;
override_testf = tm_4;
local_flags = set_pass, output_on_pass, output_on_fail, value_on_pass, value_on_fail, per_pin_on_pass, per_pin_on_fail;
site_match = 0;
site_control = "parallel:";
TestB:
override = 1;
override_testf = tm_5;
local_flags = output_on_pass, output_on_fail, value_on_pass, value_on_fail, per_pin_on_pass, per_pin_on_fail;
site_match = 0;
site_control = "parallel:";
TestC:
override = 1;
override_testf = tm_2;
local_flags = set_pass, output_on_pass, output_on_fail, value_on_pass, value_on_fail, per_pin_on_pass, per_pin_on_fail;
site_match = 0;
site_control = "parallel:";
UpdateNexusVariables:
override = 1;
override_testf = tm_10;
local_flags = output_on_pass, output_on_fail, value_on_pass, value_on_fail, per_pin_on_pass, per_pin_on_fail;
site_match = 2;
site_control = "parallel:";
end
-----------------------------------------------------------------
test_flow
run(UpdateNexusVariables);
run_and_branch(ACSEdge_DPAT_Limit_Change)
then
{
}
else
{
multi_bin;
}
run_and_branch(TestA)
then
{
}
else
{
multi_bin;
}
run_and_branch(TestB)
then
{
}
else
{
multi_bin;
}
run_and_branch(TestC)
then
{
}
else
{
multi_bin;
}
if 1 then
{
}
else
{
}
stop_bin "1", "Good Bin", , good, noreprobe, green, 1, not_over_on;
end
-----------------------------------------------------------------
binning
otherwise bin = "db", "", , bad, noreprobe, red, , not_over_on;
end
-----------------------------------------------------------------
context
context_config_file = "pins";
context_levels_file = "levels";
context_timing_file = "Timing";
context_testtable_file = "FT_Limits.mfh";
end
-----------------------------------------------------------------
hardware_bin_descriptions
1 = "Good Bin";
end

Navigate to the DPAT app directory (~/apps/application-dpat-v3.1.0/rd-app_dpat_py), where you can find the following files.

Dockerfile: used to build the DPAT app Docker image.


FROM registry.advantest.com/adv-dpat/python39-basic:2.0
# Install and configure an SSH server (demo only: enables root login with a fixed password)
RUN yum install -y openssh-server
RUN mkdir /var/run/sshd
RUN echo 'root:root123' | chpasswd
RUN sed -i 's/PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config
RUN sed 's@session\s*required\s*pam_loginuid.so@session optional pam_loginuid.so@g' -i /etc/pam.d/sshd
EXPOSE 22
# set nexus env variables
WORKDIR /
RUN python3.9 -m pip install pandas jsonschema
# copy run files and directories
RUN mkdir -p /dpat-app;chmod a+rwx /dpat-app
RUN mkdir -p /dpat-app/data;chmod a+rwx /dpat-app/data
RUN touch dpat-app/__init__.py
COPY workdir /dpat-app/workdir
COPY conf /dpat-app/conf
# RUN yum -y install libunwind-devel
# Make login shells (e.g. SSH sessions) inherit the environment of the container's main process
RUN echo 'export $(cat /proc/1/environ |tr "\0" "\n" | xargs)' >> /etc/profile
ENV LOG_FILE_PATH="/tmp/app.log"
ENV DFF_FLAG="False"
WORKDIR /dpat-app/workdir
RUN /usr/bin/ssh-keygen -A
# start.sh runs the DPAT app in the background and keeps sshd in the foreground
RUN echo "python3.9 -u /dpat-app/workdir/run_dpat.py&" >> /start.sh
RUN echo "/usr/sbin/sshd -D" >> /start.sh
CMD sh /start.sh

workdir/run_dpat.py: the main entry point of the DPAT app.


"""
Copyright 2023 ADVANTEST CORPORATION. All rights reserved

Dynamic Part Average Testing
This module allows the user to calculate new limits based on dynamic part
average testing. It is assumed that this is being run on the docker
container with the Nexus connection.
"""
import json

from AdvantestLogging import logger
from dpat import DPAT
from oneapi import OneAPI, send_command


def run(nexus_data, args, save_result_fn):
    """Callback function that will be called upon receiving data from OneAPI

    Args:
        nexus_data: Datalog coming from nexus
        args: Arguments set by user
        save_result_fn: Callback that receives the results DataFrame
    """
    base_limits = args["baseLimits"]
    dpat = args["DPAT"]  # persistent class
    # check if all test ids have been processed (similar to wafer or lot end)
    # if nexus_data.shape[0] % len(nexus_data.TestId.unique()) == 0:
    dpat.compute_once(nexus_data, base_limits, logger, args)
    results_df, new_limits = dpat.datalog()
    # logger.info(new_limits)
    if args["VariableControl"]:
        # Push the new limits to SmarTest through Nexus VariableControl
        send_command(new_limits, "VariableControl")
    save_result_fn(results_df)


def main():
    """Set logger and call OneAPI"""
    logger.info("Starting DPAT data collection and computation")
    logger.info("Copyright 2022 - Advantest America Inc")
    with open("data/base_limits.json", encoding="utf-8") as json_file:
        base_limits = json.load(json_file)
    args = {
        "DPAT": DPAT(),
        "baseLimits": base_limits,
        "config_path": "../conf/test_suites.ini",
        "setPathStorage": "data",
        "setPrefixStorage": "Demo_Dpat_123456",
        "saveStat": True,
        "VariableControl": False,
        # Note: smt version is not visible from run_dpat, so it is set in sample.py in consumeLotStart
    }
    logger.info("Starting OneAPI")
    oneapi = OneAPI(callback_fn=run, callback_args=args)
    oneapi.start()


if __name__ == "__main__":
    main()
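run_dpat.py loads data/base_limits.json at startup, but the tutorial does not show that file. The columns that dpat.py reads suggest its shape. Each top-level key is a test ID that preprocess_data() converts with astype(int), and each value carries the base pass range plus the DPAT parameters. The sketch below is a hypothetical pre-flight check, not part of the demo archive; the helper names and the sample entries are assumptions. It is deliberately conservative and requires every field on every entry.

```python
import json
from typing import Dict, List

# Fields that dpat.py reads from the base-limit entries (collected from the code above)
REQUIRED_FIELDS = {
    "PassRangeLsl", "PassRangeUsl", "DPAT_Type", "DPAT_Sigma",
    "DPAT_Window_Size", "DPAT_IQR_Multiple", "DPAT_Threshold", "DPAT_Samples",
}
# DPAT_Type values that DPAT.compute() dispatches on
DPAT_TYPES = {"STDEV", "STDEV_FULL", "STDEV_RUNNING_WINDOW", "IQR_FULL", "IQR_RUNNING_WINDOW"}


def validate_base_limits(base_limits: Dict[str, dict]) -> List[str]:
    """Return a list of problems found in a base_limits dict (empty if none)."""
    problems = []
    for test_id, entry in base_limits.items():
        # preprocess_data() converts the keys with astype(int)
        if not test_id.isdigit():
            problems.append(f"{test_id}: key is not an integer test ID")
        missing = REQUIRED_FIELDS - set(entry)
        if missing:
            problems.append(f"{test_id}: missing {sorted(missing)}")
        if entry.get("DPAT_Type") not in DPAT_TYPES:
            problems.append(f"{test_id}: unknown DPAT_Type {entry.get('DPAT_Type')!r}")
        lsl, usl = entry.get("PassRangeLsl"), entry.get("PassRangeUsl")
        if lsl is not None and usl is not None and lsl >= usl:
            problems.append(f"{test_id}: PassRangeLsl must be below PassRangeUsl")
    return problems


def validate_file(path: str) -> List[str]:
    """Load a base_limits.json file and validate it."""
    with open(path, encoding="utf-8") as f:
        return validate_base_limits(json.load(f))
```

Point validate_file() at your copy of base_limits.json before building the image. An empty list means every entry has the fields the algorithm expects.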

workdir/dpat.py: the core algorithm for the DPAT calculation.


"""
Copyright 2023 ADVANTEST CORPORATION. All rights reserved

This module contains a class and modules that allow the user to calculate
new limits based on dynamic part average testing. It is assumed that this
is being run on the docker container with the Nexus connection, being
called by run_dpat.py.
"""
import time
from typing import Dict, List, Tuple

import numpy as np
import pandas as pd


class DPAT:
    """
    This class allows the user to calculate new limits based on dynamic part
    average testing. It is assumed that this is being run on the docker
    container with the Nexus connection.

    Contains the following methods:
        * compute_once(nexus_data, base_limits, logger, args)
        * save_stat(results, df_cumulative_raw, args)
        * check_new_limits(df_new_limits)
        * stdev_full_compute(df_stdev_full, return_columns)
        * stdev_window_compute(df_stdev_window, return_columns)
        * iqr_full_compute(df_iqr_full, return_columns)
        * iqr_window_compute(df_iqr_window, return_columns)
        * stdev_sample_compute(df_cumulative_raw, return_columns)
        * compute(df_base_limits, df_cumulative_raw, args)
        * format_result(result_df)
        * datalog()
        * preprocess_data(base_limits)
    """

    def __init__(self):
        self.counter = 0

    def compute_once(self, nexus_data: pd.DataFrame, base_limits: Dict, logger, args):
        """Preprocess data, setting base values and columns. Should run only once.

        Args:
            nexus_data: DataFrame with pin values coming from nexus
            base_limits: Dict with base limits and parameters
            args: Dictionary with arguments set on main by user
        """
        self.counter += 1
        self.nexus_data = nexus_data
        self.args = args
        self.logger = logger
        self.df_base_limits, self.df_cumulative_raw = self.preprocess_data(base_limits)

    def save_stat(self, results: pd.DataFrame, df_cumulative_raw: pd.DataFrame, args: Dict):
        """Save new limits and raw file into csv.

        Args:
            results: DataFrame with the new limits
            df_cumulative_raw: DataFrame with base values and columns
            args: Dictionary with configuration parameters set by the user
        """
        self.logger.info("=> Start save_stat")
        set_path_storage = args.get("setPathStorage")
        set_prefix_storage = args.get("setPrefixStorage")
        file_to_save_raw = set_path_storage + "/" + set_prefix_storage + "_raw.csv"
        file_to_save = set_path_storage + "/" + set_prefix_storage + "_stdev.csv"
        df_cumulative_raw.to_csv(file_to_save_raw, mode="a", index=False)
        results.to_csv(file_to_save, mode="a", index=False)
        self.logger.info("=> End save_stat")

    def check_new_limits(self, df_new_limits: pd.DataFrame) -> List:
        """Check new limits against existing thresholds and return ids that are off limits.
        The ids that don't meet the criteria are reset to base limits.

        Args:
            df_new_limits: DataFrame with calculated limits
        Return:
            test_ids: List with test_ids that are off the base limits
        """
        # New limits that are below user defined threshold
        below_threshold = df_new_limits[
            (df_new_limits["limit_Usl"] - df_new_limits["limit_Lsl"])
            < (df_new_limits["PassRangeUsl"] - df_new_limits["PassRangeLsl"])
            * df_new_limits["DPAT_Threshold"]
        ]
        # New limits that are below base lower limit
        below_lsl = df_new_limits[
            (df_new_limits["N"] < df_new_limits["DPAT_Samples"])
            | (df_new_limits["limit_Lsl"] < df_new_limits["PassRangeLsl"])
            * df_new_limits["DPAT_Threshold"]
        ]
        # New limits that are above base upper limit
        above_usl = df_new_limits[
            (df_new_limits["N"] < df_new_limits["DPAT_Samples"])
            | (df_new_limits["limit_Usl"] > df_new_limits["PassRangeUsl"])
            * df_new_limits["DPAT_Threshold"]
        ]
        test_ids = []
        if not above_usl.empty:
            test_ids = above_usl.TestId.unique().tolist()
        if not below_lsl.empty:
            test_ids = test_ids + below_lsl.TestId.unique().tolist()
        if not below_threshold.empty:
            test_ids = test_ids + below_threshold.TestId.unique().tolist()
        return list(set(test_ids))  # return unique test_ids

    def stdev_full_compute(self, df_stdev_full: pd.DataFrame, return_columns: List) -> pd.DataFrame:
        """Compute stdev method for specific test_ids.
        It updates the lower and upper limits following this formula:
            LPL = Mean - <dpat_sigma> * Sigma
            UPL = Mean + <dpat_sigma> * Sigma,
        where <dpat_sigma> is a user-defined parameter.

        Args:
            df_stdev_full: DataFrame with base values and columns
            return_columns: Columns to filter returned dataframe on
        Return:
            df_result: DataFrame with calculated stdev full and specific return columns
        """
        df_stdev_full.loc[:, 'N'] = \
            df_stdev_full.groupby('TestId')['TestId'].transform('count').values
        df_n = df_stdev_full[df_stdev_full["N"] > 1]
        df_one = df_stdev_full[df_stdev_full["N"] <= 1]
        mean = df_n.groupby("TestId")["PinValue"].transform("mean")
        stddev = df_n.groupby("TestId")["PinValue"].transform(lambda x: np.std(x, ddof=1))
        # Calculate new limits according to stdev sample
        df_n.loc[:, "stdev_all"] = stddev.values
        df_n.loc[:, "limit_Lsl"] = (mean - (stddev * df_n["DPAT_Sigma"])).values
        df_n.loc[:, "limit_Usl"] = (mean + (stddev * df_n["DPAT_Sigma"])).values
        # Assign base limits to test_ids with a single instance
        df_one.loc[:, "limit_Lsl"] = df_one["PassRangeLsl"].values
        df_one.loc[:, "limit_Usl"] = df_one["PassRangeUsl"].values
        df_one.loc[:, "stdev_all"] = 0
        df_result = pd.concat([df_one, df_n])[return_columns].drop_duplicates()
        return df_result

    def stdev_window_compute(self, df_stdev_window: pd.DataFrame, return_columns: List) -> pd.DataFrame:
        """Compute stdev method for a specific window of test_ids.
        The mean and sigma are calculated for that window, and then it updates
        the lower and upper limits following this formula:
            LPL = Mean - <dpat_sigma> * Sigma
            UPL = Mean + <dpat_sigma> * Sigma,
        where <dpat_sigma> is a user-defined parameter.

        Args:
            df_stdev_window: DataFrame with base values and columns
            return_columns: Columns to filter returned dataframe on
        Return:
            df_result: DataFrame with calculated stdev window and specific return columns
        """
        df_stdev_window.loc[:, 'N'] = \
            df_stdev_window.groupby('TestId')['TestId'].transform('count').values
        # Separate test_ids with more than one sample
        df_n = df_stdev_window[df_stdev_window["N"] > 1]
        df_one = df_stdev_window[df_stdev_window["N"] <= 1]
        # Slice df according to window_size
        df_stdev_window.loc[:, 'N'] = df_stdev_window["DPAT_Window_Size"].values
        df_window_size = df_n.groupby("TestId").apply(
            lambda x: x.iloc[int(-x.N.values[0]):]
        ).reset_index(drop=True)
        if not df_n.empty:
            # Calculate new limits
            mean = df_window_size.groupby("TestId")["PinValue"].transform("mean").values
            stddev = df_window_size.groupby("TestId")["PinValue"].transform(
                lambda x: np.std(x, ddof=1)
            ).values
            df_n.loc[:, "stdev_all"] = stddev
            df_n.loc[:, "limit_Lsl"] = (mean - (stddev * df_n["DPAT_Sigma"])).values
            df_n.loc[:, "limit_Usl"] = (mean + (stddev * df_n["DPAT_Sigma"])).values
        # Assign base limits to test_ids with a single instance
        df_one.loc[:, "limit_Lsl"] = df_one["PassRangeLsl"].values
        df_one.loc[:, "limit_Usl"] = df_one["PassRangeUsl"].values
        df_one.loc[:, "stdev_all"] = 0
        df_result = pd.concat([df_n, df_one])[return_columns].drop_duplicates()
        return df_result

    def iqr_full_compute(self, df_iqr_full: pd.DataFrame, return_columns: List) -> pd.DataFrame:
        """
        Update the lower and upper limits following this formula:
            IQR = Upper Quartile(Q3) - Lower Quartile(Q1)
            LSL = Q1 - <DPAT_IQR_Multiple> * IQR
            USL = Q3 + <DPAT_IQR_Multiple> * IQR

        Args:
            df_iqr_full: DataFrame with base values and columns
            return_columns: Columns to filter returned dataframe on
        Return:
            df_result: DataFrame with calculated IQR full and specific return columns
        """
        window_size = df_iqr_full["DPAT_Window_Size"]
        df_iqr_full.loc[:, "N"] = window_size
        df_iqr_full.loc[:, 'N'] = df_iqr_full.groupby('TestId')['TestId'].transform('count')
        # Separate test_ids with more than one sample
        df_n = df_iqr_full[df_iqr_full["N"] > 1]
        df_one = df_iqr_full[df_iqr_full["N"] <= 1]
        if not df_n.empty:
            # Compute new limits
            first_quartile = df_n.groupby("TestId").PinValue.quantile(q=0.25)
            upper_quartile = df_n.groupby("TestId").PinValue.quantile(q=0.75)
            iqr = upper_quartile - first_quartile
            multiple = df_n.groupby("TestId")["DPAT_IQR_Multiple"].first()
            df_n = df_n[return_columns].drop_duplicates()
            df_n.loc[:, "limit_Lsl"] = (first_quartile - (multiple * iqr)).values
            df_n.loc[:, "limit_Usl"] = (upper_quartile + (multiple * iqr)).values
            df_n.loc[:, "q1_all"] = first_quartile.values
            df_n.loc[:, "q3_all"] = upper_quartile.values
        # Assign base limits to test_ids with a single instance
        df_one.loc[:, "limit_Lsl"] = df_one["PassRangeLsl"].values
        df_one.loc[:, "limit_Usl"] = df_one["PassRangeUsl"].values
        df_one["q1_all"] = df_one["q1_all"].astype('float64')
        df_one["q3_all"] = df_one["q3_all"].astype('float64')
        df_one.loc[:, "q1_all"] = np.nan
        df_one.loc[:, "q3_all"] = np.nan
        df_result = pd.concat([df_n, df_one])
        return df_result[return_columns]

    def iqr_window_compute(self, df_iqr_window: pd.DataFrame, return_columns: List) -> pd.DataFrame:
        """Before calculating the limits, it slices the df according to the <window_size>.
        Then it updates the lower and upper limits following this formula:
            IQR = Upper Quartile(Q3) - Lower Quartile(Q1)
            LSL = Q1 - <DPAT_IQR_Multiple> * IQR
            USL = Q3 + <DPAT_IQR_Multiple> * IQR

        Args:
            df_iqr_window: DataFrame with base values and columns
            return_columns: Columns to filter returned dataframe on
        Return:
            df_result: DataFrame with calculated IQR window and specific return columns
        """
        window_size = df_iqr_window["DPAT_Window_Size"].values
        df_iqr_window.loc[:, "window_size"] = window_size
        df_iqr_window.loc[:, 'N'] = \
            df_iqr_window.groupby('TestId')['TestId'].transform('count').values
        # Separate test_ids with more than one sample
        df_n = df_iqr_window[df_iqr_window["N"] > 1]
        df_one = df_iqr_window[df_iqr_window["N"] <= 1]
        if not df_n.empty:
            # Slice df according to window_size
            df_window_size = df_n.groupby("TestId").apply(
                lambda x: x.iloc[int(-x.window_size.values[0]):]
            ).reset_index(drop=True)
            # Calculate IQR
            first_quartile = df_window_size.groupby(["TestId"]).PinValue.quantile(q=0.25)
            upper_quartile = df_window_size.groupby(["TestId"]).PinValue.quantile(q=0.75)
            iqr = upper_quartile - first_quartile
            multiple = df_n.groupby("TestId")["DPAT_IQR_Multiple"].first()
            df_n = df_n[return_columns].drop_duplicates()
            # Calculate new limits
            df_n.loc[:, "limit_Lsl"] = (first_quartile - (multiple * iqr)).values
            df_n.loc[:, "limit_Usl"] = (upper_quartile + (multiple * iqr)).values
            df_n.loc[:, "q1_window"] = first_quartile.values
            df_n.loc[:, "q3_window"] = upper_quartile.values
        # Assign base limits to test_ids with a single instance
        df_one.loc[:, "limit_Lsl"] = df_one["PassRangeLsl"].values
        df_one.loc[:, "limit_Usl"] = df_one["PassRangeUsl"].values
        df_one["q1_window"] = df_one["q1_window"].astype('float64')
        df_one["q3_window"] = df_one["q3_window"].astype('float64')
        df_one.loc[:, "q1_window"] = np.nan
        df_one.loc[:, "q3_window"] = np.nan
        df_result = pd.concat([df_n, df_one])
        return df_result[return_columns]

    def stdev_sample_compute(self, df_cumulative_raw: pd.DataFrame, return_columns: List) -> pd.DataFrame:
        """
        Updates the lower and upper limits following this formula:
            LSL = Mean - <multiplier> * Sigma
            USL = Mean + <multiplier> * Sigma

        Args:
            df_cumulative_raw: DataFrame with base values and columns
            return_columns: Columns to filter returned dataframe on
        Return:
            df_stdev: DataFrame with calculated stdev and specific return columns
        """
        df_stdev = df_cumulative_raw[
            (df_cumulative_raw["DPAT_Type"] == "STDEV") & (df_cumulative_raw["nbr_executions"] > 1)
        ]
        df_stdev_one = df_cumulative_raw[
            (df_cumulative_raw["DPAT_Type"] == "STDEV") & (df_cumulative_raw["nbr_executions"] <= 1)
        ]
        # Sample stdev from running sums: sqrt((n * sum(x^2) - sum(x)^2) / (n * (n - 1)))
        df_stdev.loc[:, "stdev"] = (
            (
                df_stdev["nbr_executions"] * df_stdev["sum_of_squares"]
                - df_stdev["sum"] * df_stdev["sum"]
            ) / (
                df_stdev["nbr_executions"] * (df_stdev["nbr_executions"] - 1)
            )
        ).pow(1./2).values
        mean = df_stdev["sum"] / df_stdev["nbr_executions"]
        df_stdev.loc[:, "limit_Lsl"] = (mean - (df_stdev["stdev"] * df_stdev["DPAT_Sigma"])).values
        df_stdev.loc[:, "limit_Usl"] = (mean + (df_stdev["stdev"] * df_stdev["DPAT_Sigma"])).values
        df_stdev_one.loc[:, "limit_Lsl"] = df_stdev_one["PassRangeLsl"].values
        df_stdev_one.loc[:, "limit_Usl"] = df_stdev_one["PassRangeUsl"].values
        df_stdev = pd.concat([df_stdev, df_stdev_one])[return_columns]
        return df_stdev

    def compute(self, df_base_limits: pd.DataFrame, df_cumulative_raw: pd.DataFrame, args: Dict) -> pd.DataFrame:
        """Compute new limits with standard deviation and IQR methods

        Args:
            df_base_limits: DataFrame with base limits and parameters
            df_cumulative_raw: DataFrame with base values and columns
            args: Dictionary with parameters set by the user
        Returns:
            new_limits: DataFrame with new limits after compute
        """
        self.logger.info("=>Starting Compute")
        # define base columns and fill with NANs
        df_cumulative_raw["stdev_all"] = np.nan
        df_cumulative_raw["stdev_window"] = np.nan
        df_cumulative_raw["stdev"] = np.nan
        df_cumulative_raw["q1_all"] = np.nan
        df_cumulative_raw["q3_all"] = np.nan
        df_cumulative_raw["q1_window"] = np.nan
        df_cumulative_raw["q3_window"] = np.nan
        df_cumulative_raw["N"] = np.nan
        df_cumulative_raw["limit_Lsl"] = np.nan
        df_cumulative_raw["limit_Usl"] = np.nan
        return_columns = [
            "TestId", "stdev_all", "stdev_window", "stdev", "q1_all", "q3_all",
            "q1_window", "q3_window", "N", "limit_Lsl", "limit_Usl",
            "PassRangeUsl", "PassRangeLsl"
        ]
        in_time = time.time()
        # Updates the lower and upper limits following this formula:
        # LSL = Mean - <multiplier> * Sigma (sample)
        # USL = Mean + <multiplier> * Sigma (sample)
        df_stdev = self.stdev_sample_compute(df_cumulative_raw, return_columns)
        # Updates the lower and upper limits according to sigma and mean
        df_stdev_full = df_cumulative_raw[df_cumulative_raw["DPAT_Type"] == "STDEV_FULL"].copy()
        df_stdev_full = self.stdev_full_compute(df_stdev_full, return_columns)
        # Updates limits after getting a sample of <window_size>.
        df_stdev_window = df_cumulative_raw[
            df_cumulative_raw["DPAT_Type"] == "STDEV_RUNNING_WINDOW"
        ].copy()
        df_stdev_window = self.stdev_window_compute(df_stdev_window, return_columns)
        # Calculates limits based on Interquartile range.
        # IQR = Upper Quartile(Q3) - Lower Quartile(Q1)
        # LSL = Q1 - <DPAT_IQR_Multiple> * IQR
        # USL = Q3 + <DPAT_IQR_Multiple> * IQR
        df_iqr_full = df_cumulative_raw[df_cumulative_raw["DPAT_Type"] == "IQR_FULL"].copy()
        df_iqr_full = self.iqr_full_compute(df_iqr_full, return_columns)
        # Gets a sample of the dataframe of <window_size> and then uses IQR
        df_iqr_window = df_cumulative_raw[df_cumulative_raw["DPAT_Type"] == "IQR_RUNNING_WINDOW"].copy()
        df_iqr_window = self.iqr_window_compute(df_iqr_window, return_columns)
        # Concatenates the 5 methods in one dataframe
        new_limits_df = pd.concat(
            [df_stdev, df_stdev_full, df_stdev_window, df_iqr_full, df_iqr_window]
        ).sort_values(by="TestId").reset_index(drop=True)
        # Check for test_ids that don't meet the criteria and are off limits
        test_ids_off_limits = self.check_new_limits(new_limits_df.merge(df_base_limits))
        if len(test_ids_off_limits) > 0:
            # Sets the off limits ids to the base values
            new_limits_df.loc[new_limits_df.TestId.isin(test_ids_off_limits), "limit_Usl"] = \
                new_limits_df["PassRangeUsl"]
            new_limits_df.loc[new_limits_df.TestId.isin(test_ids_off_limits), "limit_Lsl"] = \
                new_limits_df["PassRangeLsl"]
        new_limits_df["PassRangeLsl"] = new_limits_df["limit_Lsl"]
        new_limits_df["PassRangeUsl"] = new_limits_df["limit_Usl"]
        # Prepare dataframe with return format
        new_limits_df = new_limits_df.merge(
            df_cumulative_raw[["TestId", "TestSuiteName", "Testname", "pins"]].drop_duplicates(),
            on="TestId", how="left"
        )
        # Pick return columns and drop duplicates
        new_limits_df = new_limits_df[[
            "TestId", "TestSuiteName", "Testname", "pins", "PassRangeLsl", "PassRangeUsl",
        ]].drop_duplicates()
        out_time = time.time()
        self.logger.info("Compute Setup Test Time=%.3f sec" % (out_time - in_time))
        # Parameter set by user, persists result in a file
        if args.get("saveStat"):
            self.save_stat(new_limits_df, df_cumulative_raw, args)
        self.logger.info("=> End Compute")
        return new_limits_df

    def format_result(self, result_df: pd.DataFrame) -> str:
        """Convert dataframe to string with variables to be used in VariableControl
        Follows this format (space separated):
            TestId0,<id> TestId1,<id> ... limit_lsl.<id>,<value> ... limit_usl.<id>,<value> ...
        """
        # Sort and renumber rows so that the TestId<n> variables are consecutive from 0
        new_limits_df = result_df.sort_values(by="TestId").reset_index(drop=True)
        new_limits_df["test_id_var"] = "TestId" + new_limits_df.index.astype(str) + "," + \
            new_limits_df["TestId"].astype(str)
        test_ids = ' '.join(new_limits_df["test_id_var"])
        new_limits_df["limits_lsl"] = "limit_lsl." + new_limits_df["TestId"].astype(str) + \
            "," + new_limits_df["PassRangeLsl"].astype(str)
        new_limits_df["limits_usl"] = "limit_usl." + new_limits_df["TestId"].astype(str) + \
            "," + new_limits_df["PassRangeUsl"].astype(str)
        limits_lsl = ' '.join(new_limits_df["limits_lsl"])
        limits_usl = ' '.join(new_limits_df["limits_usl"])
        variable_command = test_ids + " " + limits_lsl + " " + limits_usl
        return variable_command

    def datalog(self) -> Tuple[pd.DataFrame, str]:
        """Prepare data and compute new limits

        Returns:
            new_limits_df: DataFrame with new limits after compute
            variable_command: formatted command with new_limits after compute
        """
        nexus_data = self.nexus_data
        df_base_limits = self.df_base_limits
        df_cumulative_raw = self.df_cumulative_raw
        args = self.args
        self.logger.info("=> Starting Datalog")
        start = time.time()
        # Datalog received from nexus (a DataFrame, not a csv file)
        current_result_datalog = nexus_data
        # Prepare base values and columns
        df_cumulative_raw = df_cumulative_raw[["TestId", "sum", "sum_of_squares", "nbr_executions"]]
        df_cumulative_raw = df_cumulative_raw.merge(current_result_datalog, on="TestId")
        df_cumulative_raw["nbr_executions"] = df_cumulative_raw["nbr_executions"] + 1
        df_cumulative_raw["sum"] = df_cumulative_raw["sum"] + df_cumulative_raw["PinValue"]
        df_cumulative_raw["sum_of_squares"] = (
            df_cumulative_raw["sum_of_squares"]
            + df_cumulative_raw["PinValue"] * df_cumulative_raw["PinValue"]
        )
        df_cumulative_raw = df_cumulative_raw.merge(df_base_limits, on="TestId", how="left")
        # Compute new limits according to stdev and IQR methods
        new_limits_df = self.compute(df_base_limits, df_cumulative_raw, args)
        end = time.time()
        self.logger.info(
            "Total Setup/Parallel Computation and Return result Test Time=%f" % (end - start)
        )
        self.logger.info("=> End of Datalog:")
        # Format the result in Nexus Variable format
        return new_limits_df.copy(), self.format_result(new_limits_df)

    def preprocess_data(self, base_limits: dict) -> Tuple[pd.DataFrame, pd.DataFrame]:
        """Initialize dataframes with base values before they can be computed

        Args:
            base_limits: Dict that contains base limits and parameters, extracted from json file
        Returns:
            df_base_limits: DataFrame with base_limits and parameters
            cumulative_statistics: DataFrame with base columns and values to be used in compute
        """
        self.logger.info("=>Beginning of Init")
        df_base_limits = pd.DataFrame.from_dict(base_limits, orient="index")
        df_base_limits["TestId"] = df_base_limits.index.astype(int)
        df_base_limits.reset_index(inplace=True, drop=True)
        # Pick only columns of interest
        cumulative_statistics = df_base_limits[
            ["TestId", "PassRangeUsl", "PassRangeLsl", "DPAT_Sigma"]
        ].copy()
        # Set 0 to base columns
        cumulative_statistics["nbr_executions"] = 0
        cumulative_statistics["sum_of_squares"] = 0
        cumulative_statistics["sum"] = 0
        self.logger.info("=>End of Init")
        return df_base_limits, cumulative_statistics
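The sketch below is meant to show what the DPAT_Type branches compute without needing pandas or a Nexus connection. It re-implements the per-test formulas on a plain list of measurements for one TestId. It is a simplified illustration, not the demo's code path, and it rests on a few assumptions:

- The function names are hypothetical.
- Python's `statistics.quantiles(..., method="inclusive")` stands in for pandas' default linear-interpolation quantile.
- `accept_or_reset` approximates check_new_limits() under the assumption that DPAT_Threshold is positive.

The IQR multiplier is taken from each entry's DPAT_IQR_Multiple field; 1.5 is the classic Tukey value.

```python
import math
import statistics
from typing import Sequence, Tuple

Limits = Tuple[float, float]


def stdev_limits(values: Sequence[float], dpat_sigma: float) -> Limits:
    """STDEV_FULL: mean -/+ DPAT_Sigma * sample standard deviation (ddof=1)."""
    mean = statistics.mean(values)
    sigma = statistics.stdev(values)  # sample stdev, like np.std(x, ddof=1)
    return mean - dpat_sigma * sigma, mean + dpat_sigma * sigma


def window_stdev_limits(values: Sequence[float], window_size: int, dpat_sigma: float) -> Limits:
    """STDEV_RUNNING_WINDOW: the same formula on the last window_size samples only."""
    return stdev_limits(list(values)[-window_size:], dpat_sigma)


def running_stdev(n: int, total: float, sum_of_squares: float) -> float:
    """STDEV: sample stdev from the running sums that datalog() accumulates."""
    return math.sqrt((n * sum_of_squares - total * total) / (n * (n - 1)))


def iqr_limits(values: Sequence[float], multiple: float) -> Limits:
    """IQR_FULL: Q1 - m * IQR and Q3 + m * IQR, with m = DPAT_IQR_Multiple."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - multiple * iqr, q3 + multiple * iqr


def accept_or_reset(new: Limits, base: Limits, n: int, min_samples: int, threshold: float) -> Limits:
    """Simplified check_new_limits(): keep the base limits when there are too few
    samples, when the new limits leave the base range, or when the new range is
    narrower than threshold * base range."""
    (lsl, usl), (base_lsl, base_usl) = new, base
    too_few = n < min_samples
    outside = lsl < base_lsl or usl > base_usl
    too_narrow = (usl - lsl) < (base_usl - base_lsl) * threshold
    return base if (too_few or outside or too_narrow) else new
```

For example, `iqr_limits([1.0, 2.0, 3.0, 4.0, 5.0], 1.5)` gives `(-1.0, 7.0)`. Those limits then fall outside a base range of `(0.0, 10.0)`, so `accept_or_reset` returns the base range instead. The running-sum form exists because datalog() only needs to keep n, the sum and the sum of squares per test, rather than every past measurement.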

workdir/oneapi.py: uses OneAPI to perform the following functions:

- Retrieves test data from the Host Controller and sends it to the DPAT algorithm.
- Receives the DPAT results from the algorithm and sends them back to the Host Controller.


from liboneAPI import Interface
from liboneAPI import AppInfo
from sample import SampleMonitor
from sample import sendCommand
import signal
import sys
import pandas as pd
from AdvantestLogging import logger


def send_command(result_str, command):
    # name = command;
    # param = "DriverEvent LotStart"
    # sendCommand(name, param)
    name = command
    param = "DriverEvent LotStart"
    sendCommand(name, param)
    name = command
    param = "Config Enabled=1 Timeout=10"
    sendCommand(name, param)
    name = command
    param = "Set " + result_str
    sendCommand(name, param)
    logger.info(result_str)


class OneAPI:
    def __init__(self, callback_fn, callback_args):
        self.callback_args = callback_args
        self.get_suite_config(callback_args["config_path"])
        self.monitor = SampleMonitor(
            callback_fn=callback_fn, callback_args=self.callback_args
        )

    def get_suite_config(self, config_path):
        # conf/test_suites.ini: one "testNumber,testName" pair per line
        test_suites = []
        with open(config_path) as f:
            for line in f:
                li = line.strip()
                # Skip blank lines and comments
                if li and not li.startswith("#"):
                    test_suites.append(li.split(","))
        self.callback_args["test_suites"] = pd.DataFrame(
            test_suites, columns=["testNumber", "testName"]
        )

    def start(self):
        # Register the method below (a bare `quit` would resolve to the Python builtin)
        signal.signal(signal.SIGINT, self.quit)
        logger.info("Press 'Ctrl + C' to exit")
        me = AppInfo()
        me.name = "sample"
        me.vendor = "adv"
        me.version = "2.1.0"
        Interface.registerMonitor(self.monitor)
        NexusDataEnabled = True  # whether to enable Nexus Data Streaming and Control
        TPServiceEnabled = (
            True  # whether to enable TPService for communication with NexusTPI
        )
        res = Interface.connect(me, NexusDataEnabled, TPServiceEnabled)
        if res != 0:
            logger.info(f"Connect fail. code = {res}")
            sys.exit(1)
        logger.info("Connect succeed.")
        while True:
            signal.pause()

    def quit(self, signum=None, frame=None):
        # SIGINT handler: exit cleanly when the user presses Ctrl + C
        sys.exit(0)
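send_command() forwards the string built by DPAT.format_result() to VariableControl, prefixed with "Set ". It only does this when "VariableControl" is set to True in run_dpat.py; the default is False. UpdateNexusVariables.cpp later reads the values back by name. The sketch below models that string in plain Python so you can inspect what SmarTest will receive.

The helper names are hypothetical. The token layout is taken from format_result(): space-separated `TestId<n>,<id>`, `limit_lsl.<id>,<value>` and `limit_usl.<id>,<value>` tokens. The reader mimics the lookup order of parseNewLimits(), assuming each token is a simple name,value pair.

```python
from typing import Dict, List, Tuple


def build_variable_command(limits: List[Tuple[int, float, float]]) -> str:
    """Build the space-separated string that send_command() passes after "Set ".

    limits: (test_id, lsl, usl) tuples. Layout as in DPAT.format_result():
    TestId<n>,<id> for n = 0, 1, ..., then limit_lsl.<id>,<lsl>, then limit_usl.<id>,<usl>.
    """
    ordered = sorted(limits)  # sort by test ID so TestId0, TestId1, ... are consecutive
    test_ids = [f"TestId{n},{tid}" for n, (tid, _, _) in enumerate(ordered)]
    lsl = [f"limit_lsl.{tid},{lo}" for tid, lo, _ in ordered]
    usl = [f"limit_usl.{tid},{hi}" for tid, _, hi in ordered]
    return " ".join(test_ids + lsl + usl)


def read_limits(command: str) -> Dict[int, Tuple[float, float]]:
    """Read limits back in the same order as parseNewLimits(): look up TestId0,
    TestId1, ... until one is missing, then fetch that test ID's limits."""
    # Assumption: every token is a "name,value" pair
    variables = dict(token.split(",", 1) for token in command.split())
    result = {}
    n = 0
    while f"TestId{n}" in variables:
        tid = variables[f"TestId{n}"]
        result[int(tid)] = (
            float(variables[f"limit_lsl.{tid}"]),
            float(variables[f"limit_usl.{tid}"]),
        )
        n += 1
    return result
```

For example, `build_variable_command([(1002, 0.2, 2.0), (1001, 0.5, 1.5)])` starts with `TestId0,1001 TestId1,1002`, and `read_limits()` turns it back into `{1001: (0.5, 1.5), 1002: (0.2, 2.0)}`. In this model, a gap in the TestId<n> numbering ends the read loop early and drops every later test. That is why the indices are renumbered after sorting.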

Log in to the ACS Container Hub:

sudo docker login registry.advantest.com --username ChangeToUserName --password ChangeToSecret

Build the Docker image and upload it to the ACS Container Hub.

Build the image:

cd ~
curl http://10.44.5.139/docker/python39-basic20.tar.zip -O
unzip -o python39-basic20.tar.zip
sudo docker load -i python39-basic20.tar
cd ~/apps/application-dpat-v3.1.0
sudo docker build --tag registry.advantest.com/adv-dpat/adv-dpat-v1:ChangeToImageTag rd-app_dpat_py/.

Push the image:

sudo docker push registry.advantest.com/adv-dpat/adv-dpat-v1:ChangeToImageTag

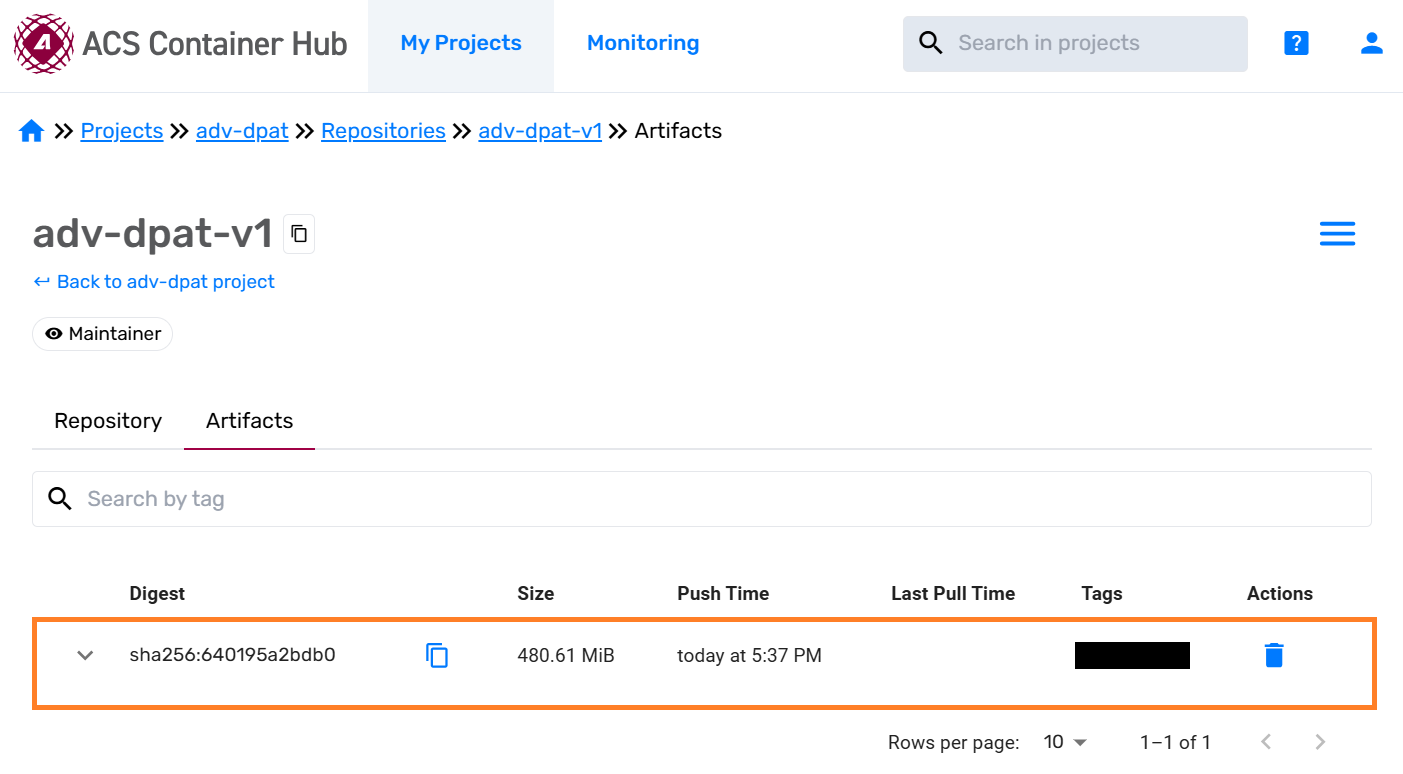

The uploaded Docker image is now listed on the Container Hub.

4. Configure the acs_nexus system service

Change the service configuration:

Edit the /opt/acs/nexus/conf/acs_nexus.ini file.

Make sure the Auto_Deploy option is set to false:

[Auto_Deploy]
Enabled=false

Make sure the Auto_Popup option is set to true:

[GUI]
Auto_Popup=true
Auto_Close=true
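If you want to double-check these two settings from a script, here is a small sketch using Python's standard configparser. It assumes acs_nexus.ini parses as a standard INI file, which is not confirmed here because the rest of the file is not shown. The function name is hypothetical.

```python
import configparser
from typing import List


def check_acs_nexus_ini(text: str) -> List[str]:
    """Return the settings from this step that are not as required (empty if all OK)."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    problems = []
    # fallback=None covers a missing section or option
    if parser.getboolean("Auto_Deploy", "Enabled", fallback=None) is not False:
        problems.append("[Auto_Deploy] Enabled should be false")
    if parser.getboolean("GUI", "Auto_Popup", fallback=None) is not True:
        problems.append("[GUI] Auto_Popup should be true")
    return problems
```

Pass it the file contents, for example `check_acs_nexus_ini(open("/opt/acs/nexus/conf/acs_nexus.ini").read())`. An empty list means both options have the required values.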

Edit the image settings file /opt/acs/nexus/conf/images.json:

- Type the Edge Server private IP in the “address” field of the “edge” section.
- Type the Container Hub user name and Docker secret in the “user” and “password” fields.
- Type your own container image tag in the “image” field.
- Type the Host Controller private IP in the “environment” field (ONEAPI_CONTROL_ZMQ_IP).

{
  "selector": {
    "device_name": "demo"
  },
  "edge": {
    "address": "<Edge Private IP>",
    "registry": {
      "address": "registry.advantest.com",
      "user": "<Container Hub User Name>",
      "password": "<Docker Secret>"
    },
    "containers": [
      {
        "name": "dpat-app",
        "image": "adv-dpat/adv-dpat-v1:<Your Own Image Tag>",
        "requirements": {
          "gpu": false,
          "mapped_ports": []
        },
        "environment": {
          "ONEAPI_CONTROL_ZMQ_IP": "<Host Controller Private IP>"
        }
      }
    ]
  }
}
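Before restarting the service, you may want to confirm that no template placeholders are left in images.json. The following is a hypothetical helper, not part of the ACS tooling. It assumes placeholders are written as `<...>`, as in the template above, and reports the JSON path of every string that still contains one. Loading the file with `json.load` also catches syntax errors such as a trailing comma, because it raises `json.JSONDecodeError`.

```python
import json
import re
from typing import Any, Iterator, List, Tuple

# Matches the "<...>" placeholders used in the images.json template
PLACEHOLDER = re.compile(r"<[^<>]+>")


def find_placeholders(node: Any, path: str = "$") -> Iterator[Tuple[str, str]]:
    """Yield (json_path, value) for every string value that still contains a placeholder."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_placeholders(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, value in enumerate(node):
            yield from find_placeholders(value, f"{path}[{index}]")
    elif isinstance(node, str) and PLACEHOLDER.search(node):
        yield path, node


def check_images_json(path: str) -> List[Tuple[str, str]]:
    """Load images.json and list the values that still hold a placeholder."""
    with open(path, encoding="utf-8") as f:
        return list(find_placeholders(json.load(f)))
```

Calling `check_images_json("/opt/acs/nexus/conf/images.json")` should return an empty list once every field above has been filled in.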

Restart the acs_nexus service to apply the changes:

sudo systemctl restart acs_nexus

5. Run the SmarTest test program

Start SmarTest 7.

Open a terminal and run:

```bash
cd ~/apps/application-dpat-v3.1.0
sh start_smt7.sh
```

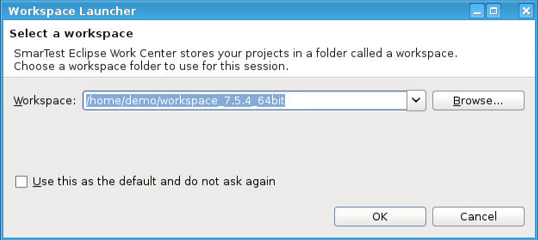

Wait a few seconds for SmarTest 7 to start, then select your workspace or create a new one.

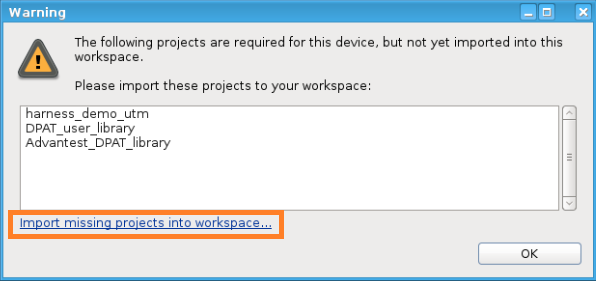

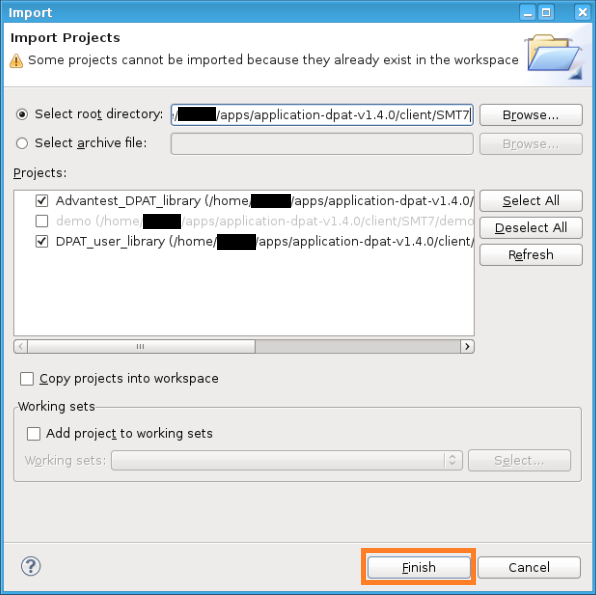

Click “Import missing projects into workspace”.

Click “Finish” to import “DPAT_user_library” into the workspace.

The “DPAT_user_library” project contains the following test method source files:

UpdateNexusVariables.cpp: retrieves the test limits for each test suite from Nexus. The limits themselves are computed by the DPAT container app on the Edge server.


```cpp
/* Copyright 2023 ADVANTEST CORPORATION. All rights reserved */
#include "testmethod.hpp"
#include "TestTableMgr.hpp"
#include "sys/time.h"
#include "mapi.hpp"
#include <unistd.h>
#include <string>
#include <sstream>
#include "ACSEdge/ACSEdgeConn.hpp"
#include "./ACSEdge/DPATACSEdgeLibrary.hpp"
#include "UpdateNexusVariables.hpp"
#include "VariableControl.h"
#include "jsmn.h"

using V93kLimits::tmLimits;
using namespace std;

#define SSTR( x ) static_cast< std::ostringstream & >( \
        ( std::ostringstream() << std::dec << x ) ).str()

NexusVariables::VariableControl Nexus_vars;

/**
 * Test method class.
 *
 * For each testsuite using this test method, one object of this
 * class is created.
 */
// Read TestId<idx>, limit_lsl.<id> and limit_usl.<id> from Nexus variables.
map<string, vector<string> > parseNewLimits (NexusVariables::VariableControl Nexus_vars){
  map<string, vector<string> > new_limits;
  double test_id;
  double limit_lsl;
  double limit_usl;
  int res = 1;
  int idx = 0;
  while(res != 0){
    vector<string> new_limit;
    res = Nexus_vars.getNexusVariable("TestId" + SSTR(idx), test_id);
    Nexus_vars.getNexusVariable("limit_lsl." + SSTR(test_id), limit_lsl);
    Nexus_vars.getNexusVariable("limit_usl." + SSTR(test_id), limit_usl);
    cout << limit_usl << endl;
    // Row layout: test number, "NA", "NA", "pins", lower limit, upper limit
    new_limit.push_back(SSTR(test_id));
    new_limit.push_back("NA");
    new_limit.push_back("NA");
    new_limit.push_back("pins");
    new_limit.push_back(SSTR(limit_lsl));
    new_limit.push_back(SSTR(limit_usl));
    new_limits.insert(make_pair(SSTR(test_id), new_limit));
    idx++;
  }
  /*for(map<string, vector<string> >::const_iterator it = new_limits.begin(); it != new_limits.end(); ++it) {
    std::cout << it->first << "\n";
    for (int i=0; i< it->second.size(); ++i) std::cout << it->second[i] << ' ';
  }*/
  return new_limits;
}

// Parse the NexusTPI reply into the same row layout as parseNewLimits.
map<string, vector<string> > parseJsonToNewLimits (const std::string& jsonStr){
  jsmn_parser parser;
  jsmntok_t tokens[100];
  jsmn_init(&parser);
  int r = jsmn_parse(&parser, jsonStr.c_str(), jsonStr.length(), tokens, sizeof(tokens)/sizeof(tokens[0]));
  map<string, vector<string> > new_limits;
  // return blank if it is not JSON format (at least: object, key, value)
  if (r < 3 || tokens[0].type != JSMN_OBJECT) {
    cout << "Warning: [UpdateNexusVariable] The string from NexusTPI is not JSON format." << endl;
    return new_limits;
  }
  string key = string(jsonStr.c_str() + tokens[1].start, tokens[1].end - tokens[1].start);
  // return blank if key is not "CalcResult"
  if (key != "CalcResult") {
    cout << "Warning: [UpdateNexusVariable] The string from NexusTPI is not correct format to parse." << endl;
    return new_limits;
  }
  // parse the new limits: each array element occupies 5 tokens
  int arraySize = tokens[2].size;
  int i = 3;
  for (int obj = 0; obj < arraySize; ++obj) {
    if (i + 4 >= r) break;  // stop if the reply has fewer tokens than expected
    vector<string> new_limit;
    string subkey = string(jsonStr.c_str() + tokens[i+1].start, tokens[i+1].end - tokens[i+1].start);
    string limit_lsl = string(jsonStr.c_str() + tokens[i+3].start, tokens[i+3].end - tokens[i+3].start);
    string limit_usl = string(jsonStr.c_str() + tokens[i+4].start, tokens[i+4].end - tokens[i+4].start);
    new_limit.push_back(SSTR(subkey));
    new_limit.push_back("NA");
    new_limit.push_back("NA");
    new_limit.push_back("pins");
    new_limit.push_back(SSTR(limit_lsl));
    new_limit.push_back(SSTR(limit_usl));
    new_limits.insert(make_pair(SSTR(subkey), new_limit));
    i += 5;
  }
  /*for(map<string, vector<string> >::const_iterator it = new_limits.begin(); it != new_limits.end(); ++it) {
    std::cout << it->first << "\n";
    for (int i=0; i< it->second.size(); ++i) std::cout << it->second[i] << ' ';
  }*/
  return new_limits;
}

class UpdateNexusVariables: public testmethod::TestMethod {

protected:
  /**
   *Initialize the parameter interface to the testflow.
   *This method is called just once after a testsuite is created.
   */
  virtual void initialize()
  {
    //Add your initialization code here
    //Note: Test Method API should not be used in this method!
  }

  /**
   *This test is invoked per site.
   */
  virtual void run()
  {
    // if(IS_FIRST_RUN()) {
    //
    // }
    ON_FIRST_INVOCATION_BEGIN();
      Nexus_vars = NexusVariables::VariableControl();
      sleep(5);
      bool err = Nexus_vars.updateNexusVariable();
      map<string, vector<string> > newLimits = parseNewLimits(Nexus_vars);
      Advantest_DPAT_library_Library_Class& Advantest_DPAT_library = Advantest_DPAT_library_Library_Class::getInstance();
      Advantest_DPAT_library.changeLimitbyTestNumber(newLimits);
      cout << err << endl;
    ON_FIRST_INVOCATION_END();
    return;
  }

  /**
   *This function will be invoked once the specified parameter's value is changed.
   *@param parameterIdentifier
   */
  virtual void postParameterChange(const string& parameterIdentifier)
  {
    //Add your code
    //Note: Test Method API should not be used in this method!
    return;
  }

  /**
   *This function will be invoked once the Select Test Method Dialog is opened.
   */
  virtual const string getComment() const
  {
    string comment = " please add your comment for this method.";
    return comment;
  }
};
REGISTER_TESTMETHOD("UpdateNexusVariables", UpdateNexusVariables);
```
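`parseNewLimits` looks up Nexus variables by name: `TestId0`, `TestId1`, and so on hold test numbers, while `limit_lsl.<testNumber>` and `limit_usl.<testNumber>` hold the limits. Each entry becomes a row `{testNumber, "NA", "NA", "pins", lsl, usl}` that is passed to `changeLimitbyTestNumber`. The Python sketch below reproduces that naming scheme against a plain dictionary standing in for `VariableControl`. The dictionary, its values, and the rule "stop at the first missing `TestId<idx>`" are assumptions for illustration; the C++ loop stops on the return code of `getNexusVariable`, whose exact semantics are not documented here.

```python
def parse_new_limits(nexus_vars):
    """Build {testNumber: row} from TestId<idx>, limit_lsl.<id> and limit_usl.<id> entries.

    nexus_vars is a plain dict standing in for NexusVariables::VariableControl (assumption).
    """
    new_limits = {}
    idx = 0
    while f"TestId{idx}" in nexus_vars:
        test_id = str(nexus_vars[f"TestId{idx}"])
        lsl = nexus_vars[f"limit_lsl.{test_id}"]
        usl = nexus_vars[f"limit_usl.{test_id}"]
        # Same column layout as the C++ vector<string>; setdefault keeps the first
        # entry for a duplicate test number, like std::map::insert.
        new_limits.setdefault(test_id, [test_id, "NA", "NA", "pins", str(lsl), str(usl)])
        idx += 1
    return new_limits


if __name__ == "__main__":
    # Hypothetical variable values.
    demo = {"TestId0": 1000, "limit_lsl.1000": 0.1, "limit_usl.1000": 0.9}
    print(parse_new_limits(demo))
```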

DPAT_ACSEgde_New_Limits.cpp: retrieves the new limits (through NexusTPI by default, or through VariableControl) and applies them to each running test suite.


```cpp
/* Copyright 2023 ADVANTEST CORPORATION. All rights reserved */
#include "testmethod.hpp"
#include "mapi.hpp"
#include "ACSEdge/ACSEdgeConn.hpp"
#include "TestTableMgr.hpp"
#include "sys/time.h"
#include "NexusTPI.h"
#include "./ACSEdge/DPATACSEdgeLibrary.hpp"
#include "UpdateNexusVariables.hpp"

using V93kLimits::tmLimits;
using namespace std;

NexusTPI& tpi = NexusTPI::getInstance();

class DPAT_ACSEgde_New_Limits: public testmethod::TestMethod {
protected:
  int VCFlag;          // 1: use VariableControl, 0: use NexusTPI
  string appName;      // name of the application the request is sent to
  string appLocation;  // location of the application, either "edge" or "aus"

protected:
  virtual void initialize()
  {
    VCFlag = 0;
    appName = "adv-dpat";
    appLocation = "edge";
  }

  virtual void run()
  {
    static int error = 0;
    ON_FIRST_INVOCATION_BEGIN();
    {
      map<string, vector<string> > newLimits;
      if (VCFlag) {
        cout << "[DPAT_ACSEgde_New_Limits] Use VariableControl to retrieve the new limit" << endl;
        newLimits = parseNewLimits(Nexus_vars);
      } else {
        cout << "[DPAT_ACSEgde_New_Limits] Use NexusTPI to retrieve the new limit" << endl;
        string cmd = "{\"request\":\"SMT7_CalcNewLimits\"}";
        NexusTPICode res_request = tpi.target(appName).location(appLocation).request(cmd);
        cout << "res_request:" << res_request << endl;
        if (res_request == 0) {
          std::string json = tpi.getResponse();
          newLimits = parseJsonToNewLimits(json);
        }
      }
      Advantest_DPAT_library_Library_Class& Advantest_DPAT_library = Advantest_DPAT_library_Library_Class::getInstance();
      Advantest_DPAT_library.changeLimitbyTestNumber(newLimits);
    }
    ON_FIRST_INVOCATION_END();
    //Nexus_vars.printAllVars();
    TEST("", "Limit_Change", tmLimits, error, FALSE);
    return;
  }

  virtual void postParameterChange(const string& parameterIdentifier)
  {
    return;
  }

  virtual const string getComment() const
  {
    string comment = " please add your comment for this method.";
    return comment;
  }
};
REGISTER_TESTMETHOD("DPAT_ACSEgde_New_Limits", DPAT_ACSEgde_New_Limits);
```
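With `VCFlag = 0`, the test method sends `{"request":"SMT7_CalcNewLimits"}` to the `adv-dpat` app on the Edge through NexusTPI and passes the reply to `parseJsonToNewLimits`. That parser expects `CalcResult` as the first key and consumes five jsmn tokens per array element, which implies a reply shaped like `{"CalcResult": [{"<testNumber>": [lsl, usl]}, ...]}`. This shape is inferred from the token arithmetic, not taken from a published schema. The Python sketch below builds the request and parses a reply of that inferred shape into the same row layout; `parse_calc_result` is a hypothetical helper.

```python
import json

# Same request string the C++ test method sends (compact separators, no spaces).
REQUEST = json.dumps({"request": "SMT7_CalcNewLimits"}, separators=(",", ":"))


def parse_calc_result(reply):
    """Parse {"CalcResult": [{"<testNumber>": [lsl, usl]}, ...]} into limit rows.

    The reply shape is inferred from parseJsonToNewLimits (assumption). Numbers are kept
    as their raw JSON text, as jsmn tokens are. Returns {} for input that parser rejects.
    """
    try:
        doc = json.loads(reply, parse_float=str, parse_int=str)
    except json.JSONDecodeError:
        return {}
    if not isinstance(doc, dict) or list(doc)[:1] != ["CalcResult"]:
        return {}
    new_limits = {}
    for entry in doc["CalcResult"]:
        [(test_id, limits)] = entry.items()  # exactly one key per array element
        lsl, usl = limits
        new_limits.setdefault(test_id, [test_id, "NA", "NA", "pins", lsl, usl])
    return new_limits


if __name__ == "__main__":
    print(REQUEST)
    print(parse_calc_result('{"CalcResult": [{"1000": [0.10, 0.90]}]}'))
```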

PAT_simulator.cpp: generates simulated test data and writes it to the datalog, where Nexus captures it and sends it to the DPAT container app on the Edge server.


```cpp
#include "testmethod.hpp" //for test method API interfaces
#include "mapi.hpp"
#include <cmath>
#include <cstdlib>
#include <sstream>
#include <string>

using namespace std;
using namespace V93kLimits;

/**
 * Test method class.
 *
 * For each testsuite using this test method, one object of this
 * class is created.
 */
class PAT_simulator: public testmethod::TestMethod {

protected:
  /**
   *Initialize the parameter interface to the testflow.
   *This method is called just once after a testsuite is created.
   *
   *Note: Test Method API should not be used in this method.
   */
  int loops;
  virtual void initialize()
  {
    addParameter("Loops", "int", &loops, testmethod::TM_PARAMETER_INPUT);
  }

  /**
   *This test is invoked per site.
   */
  virtual void run()
  {
    static double result1;
    static double u1;
    static double u2;
    ON_FIRST_INVOCATION_BEGIN();
    {
      // Box-Muller style draw scaled to mean 0.5 and sigma 0.25; u1 and u2 lie in (0, 1].
      // Note: 6.2*atan(1.) is about 4.87; the textbook angle factor is 2*pi = 8*atan(1.).
      u1=((double) (rand()) + 1. )/((double)(RAND_MAX) + 1. );
      u2=((double) (rand()) + 1. )/((double)(RAND_MAX) + 1. );
      result1 = 0.25*cos(6.2*atan(1.)*u2) * sqrt(-2.0*log(u1)) + 0.5;
    }
    ON_FIRST_INVOCATION_END();
    // Log the same value under the test names "0" .. "loops-1".
    stringstream ss;
    for (int i=0;i<loops;i++) {
      ss.str("");
      ss << i;
      string testname = ss.str();
      TEST("",testname,tmLimits,result1,TM::CONTINUE);
    }
    // TESTSET().cont(1).judgeAndLog_ParametricTest("","ThresholdA",tmLimits,result1);
    // TESTSET().cont(1).judgeAndLog_ParametricTest("","ThresholdB",tmLimits,result2);

    // Working
    //TEST(param.expandedPins[j],testLimit.limit[FIRST_POL].testname,tmLimits.getLimitObj(testLimit.limit[FIRST_POL].testname,param.expandedPins[j]),dMeasValue,TM::CONTINUE);

    // Not working
    // TestSet.cont(true).judgeAndLog_ParametricTest("CONFIG","passVolt_mV",tmLimits,1234);
    // TestSet.cont(true).judgeAndLog_ParametricTest("CLK125","passVolt_mV",tmLimits,4321);

    // Not Working
    //TESTSET().cont(TM::CONTINUE).judgeAndLog_ParametricTest("CONFIG","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CONFIG"),1234);
    //TESTSET().cont(TM::CONTINUE).judgeAndLog_ParametricTest("CLK125","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CLK125"),4321);

    // Working
    // TestSet.cont(true).judgeAndLog_ParametricTest("CONFIG","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CONFIG"),1234);
    // TestSet.cont(true).judgeAndLog_ParametricTest("CLK125","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CLK125"),4321);

    // Working
    //TEST("CONFIG","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CONFIG"),1234,TM::CONTINUE);
    //TEST("CLK125","passVolt_mV",tmLimits.getLimitObj("passVolt_mV","CLK125"),4321,TM::CONTINUE);
    return;
  }

  /**
   *This function will be invoked once the specified parameter's value is changed.
   *@param parameterIdentifier
   *
   *Note: Test Method API should not be used in this method.
   */
  virtual void postParameterChange(const string& parameterIdentifier)
  {
    //Add your code
    return;
  }

  /**
   *This function will be invoked once the Select Test Method Dialog is opened.
   */
  virtual const string getComment() const
  {
    string comment = " please add your comment for this method.";
    return comment;
  }
};
REGISTER_TESTMETHOD("PAT_simulator", PAT_simulator);
```
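`PAT_simulator` draws one value per run with a Box-Muller style transform, scaled to mean 0.5 and standard deviation 0.25, and logs it under `Loops` numbered test names. The textbook transform uses the angle 2π·u2; the listing's `6.2*atan(1.)` evaluates to about 4.87 rather than 2π (which is `8*atan(1.)`), so its output is not exactly normal. The Python sketch below shows the standard transform with the same scaling, for comparing or generating simulated data offline; `pat_sample` is a hypothetical helper, not part of the demo.

```python
import math
import random


def pat_sample(rng, mean=0.5, sigma=0.25):
    """One standard Box-Muller draw scaled like PAT_simulator (mean 0.5, sigma 0.25)."""
    # 1 - random() lies in (0, 1], like (rand() + 1) / (RAND_MAX + 1); keeps log() finite.
    u1 = 1.0 - rng.random()
    u2 = 1.0 - rng.random()
    z = math.sqrt(-2.0 * math.log(u1)) * math.cos(2.0 * math.pi * u2)
    return sigma * z + mean


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a reproducible run
    samples = [pat_sample(rng) for _ in range(10000)]
    print(sum(samples) / len(samples))
```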

Run the DPAT test program

Open the testflow file. The flow calls the following test suites:

UpdateNexusVariables: receives the computed DPAT data from Nexus.

ACSEdge_DPAT_Limit_Change: changes the test limits.

TestA / TestB / TestC: generate raw DPAT data and write it to the datalog. Nexus automatically fetches the data from the datalog and sends it to the DPAT app running on the Edge server.
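The DPAT calculation itself runs inside the Edge container app and is not shown in this tutorial. For orientation only, a common robust formulation of part average testing limits (the one described in AEC-Q001) uses the median as robust mean and (Q3 − Q1)/1.35 as robust sigma, with limits at robust mean ± 6 robust sigma. The sketch below implements that formula; it is not necessarily what the demo app computes, and the quartile interpolation (the default method of `statistics.quantiles`) is an assumption, since PAT tools differ here.

```python
import statistics


def robust_pat_limits(values, k=6.0):
    """Return (lsl, usl) = median -/+ k * (Q3 - Q1) / 1.35.

    Quartiles use statistics.quantiles' default 'exclusive' method (assumption).
    """
    if len(values) < 2:
        raise ValueError("need at least two values")
    q1, _, q3 = statistics.quantiles(values, n=4)
    robust_mean = statistics.median(values)
    robust_sigma = (q3 - q1) / 1.35
    return robust_mean - k * robust_sigma, robust_mean + k * robust_sigma


if __name__ == "__main__":
    # Hypothetical measurements from one test suite; 0.95 is an injected outlier.
    data = [0.48, 0.51, 0.50, 0.47, 0.53, 0.49, 0.52, 0.50, 0.95]
    lsl, usl = robust_pat_limits(data)
    outliers = [v for v in data if not lsl <= v <= usl]
    print(f"limits: [{lsl:.3f}, {usl:.3f}], outliers: {outliers}")
```

Using the median and interquartile range keeps a single extreme part from widening the limits, which is the point of a robust formulation.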

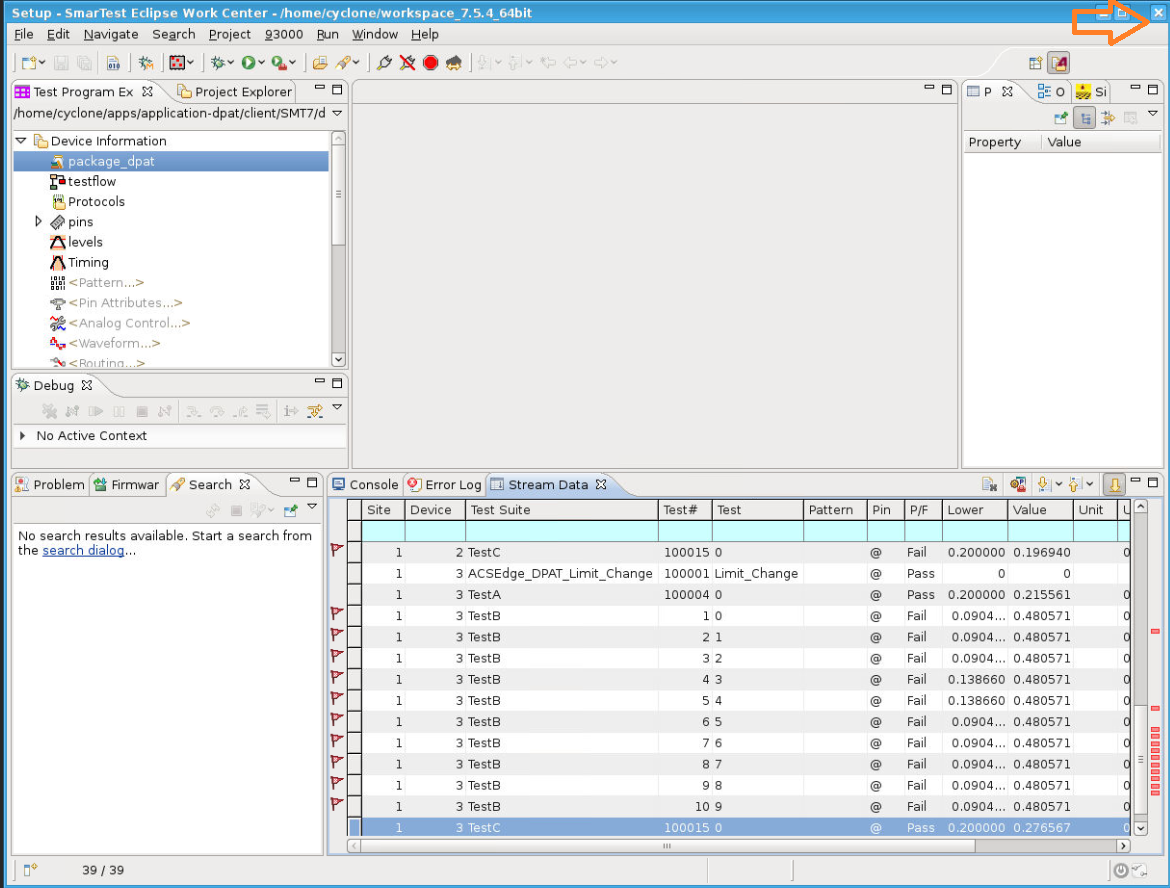

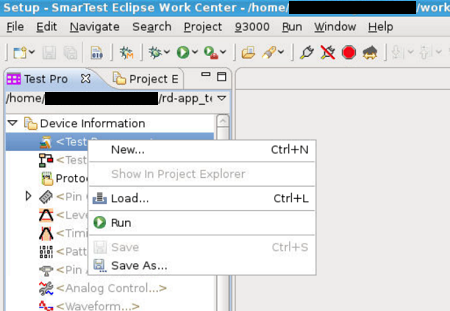

Load the test program “package_dpat”: right-click the Testprogram entry in the “Test Program Explorer” (left panel) and select “Load…”.

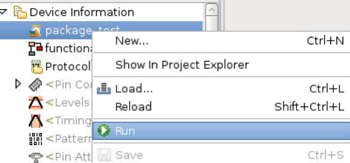

Run the test program “package_dpat”: right-click “package_dpat” and click “Run”.

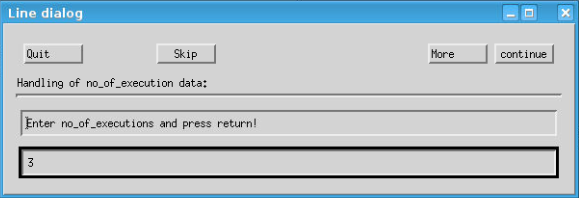

Type “3” in the test number dialog that pops up, then press Enter.

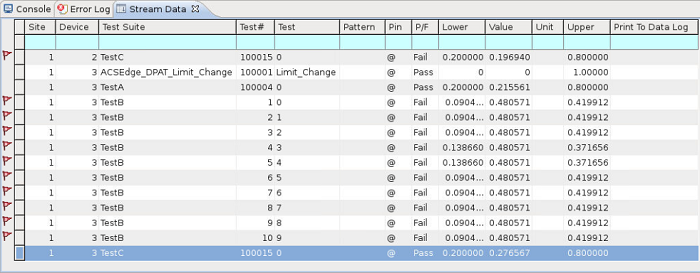

Visualize Results

To observe the limit changes, open the Result Perspective in SmarTest 7. In the Stream Data tab, filter the Test Suite to show only TestB.

Note: If the program runs quickly, the data may not appear after the first run. Run the test program a second time to obtain it.

Quit SmarTest